Image via Coroflot.

I’ve long been fascinated by the interface for virtual worlds, and have been equally convinced that while the software UI itself has massive room for improvement, the big breakthroughs might come from other devices.

I wrote some time ago about Microsoft’s purchase of 3DV Systems, quoting an Israeli news report:

“3DV Systems develops ‘virtual reality’ imaging technology for digital cameras that it sells, called ZCams (formerly Z-Sense). Its main targets are the gaming market (‘enjoy a genuinely immersive experience,’ the company says on its Web site).

Now that graphics have become so advanced, it explains, the key to making a real difference lies in how you can control the game. The ZCam lets players control the game using body gestures alone, rather as PlayStation’s EyeToy does, or Microsoft’s Vision or Nintendo’s Wii. 3DV Systems argues that its system is better than these, adding that you don’t have to wear anything.

Microsoft apparently plans to use 3DV Systems’ technology in its own gaming technology, probably in the Xbox 360.”

The result was Microsoft’s Project Natal:

As I wrote at the time: “I’ve long proposed that there’s a coming wave of innovation based on rethinking the interfaces to virtual worlds, whether they’re touch screens on the living room wall, 3D cameras, or controls based on brain waves. As virtual worlds and games become unshackled from the mouse/screen paradigm, there will be richer opportunities for augmented reality and information spaces and new ideas about how to navigate within virtual world environments and what that implies for how information space is constructed.”

On the other hand, wireless controllers only go so far, and frankly we may not always want to shadow box with prims.

But some concept work has me wondering whether the emergence of surface technology doesn’t open another path to how we interact with virtual worlds. Surface technology will allow us to unshackle the keyboard from, well, plastic letters and such.

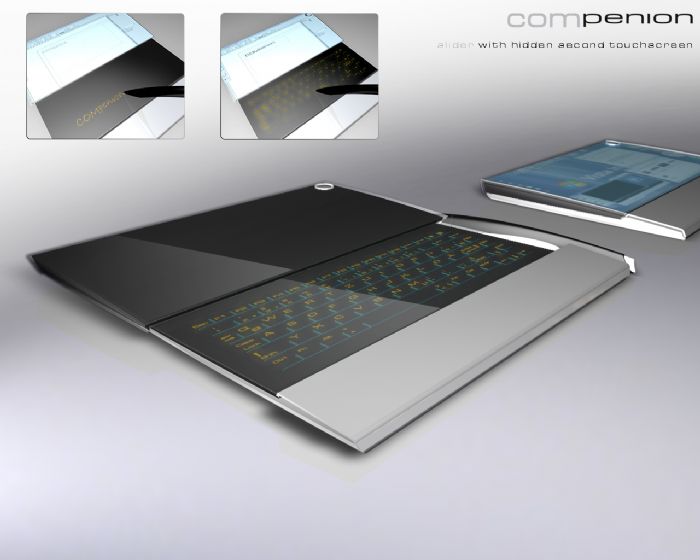

Take this concept laptop via Coroflot:

If the keyboard, or interface, is a touch screen, then the things you touch are swappable.

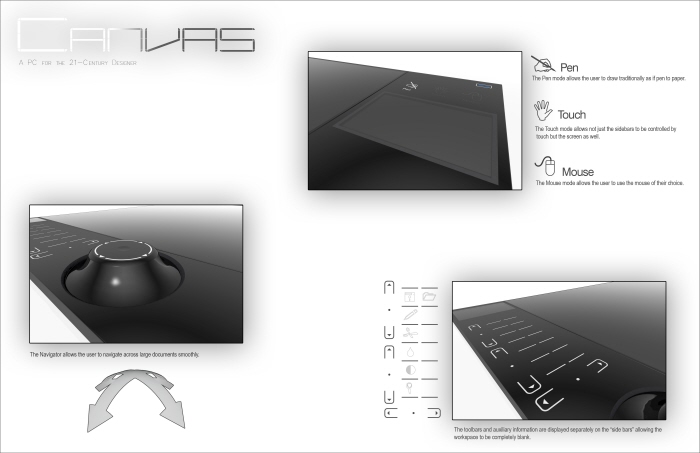

I was taken by this concept by Kyle Cherry, called Canvas and developed specifically to demonstrate an interface for designers:

Now, imagine that the “interface” could go from blank slate:

To full screen world interface:

I’m not much of a designer. Er, I’m not at all a designer. But I imagine the touch screen having a series of buttons along the side: build, communicate, and world, say. And pressing the buttons initiates a new “keyboard” with the tools for prims, or your friends list, at your fingertips.
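For the programmers in the audience, here is roughly how I picture that, as a minimal Python sketch. The three mode names are the ones above; the key labels, the `TouchKeyboard` class, and its methods are all made up for illustration and don't correspond to any real Second Life or touch-hardware API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical layouts: the mode names come from the post,
# the individual key labels are invented for this sketch.
LAYOUTS: Dict[str, List[str]] = {
    "build": ["create prim", "move", "rotate", "stretch", "texture"],
    "communicate": ["friends list", "chat", "IM", "voice"],
    "world": ["map", "search", "teleport", "landmarks"],
}


@dataclass
class TouchKeyboard:
    """A touch surface whose keys depend on the currently selected mode."""

    layouts: Dict[str, List[str]]
    mode: Optional[str] = None  # None means the surface is a blank slate

    def press_mode(self, mode: str) -> List[str]:
        """Simulate pressing a side button: swap in that mode's keys."""
        if mode not in self.layouts:
            raise KeyError(f"no layout defined for mode {mode!r}")
        self.mode = mode
        return self.keys()

    def keys(self) -> List[str]:
        """Return the keys currently shown (empty when blank)."""
        if self.mode is None:
            return []
        return list(self.layouts[self.mode])


if __name__ == "__main__":
    kb = TouchKeyboard(LAYOUTS)
    print(kb.keys())                  # blank slate, nothing to touch yet
    print(kb.press_mode("build"))     # building tools appear
    print(kb.press_mode("communicate"))  # swapped for the social tools
```

The point of keeping the layouts in a plain dictionary is that adding a fourth "keyboard" (shopping, say) would be a matter of adding one more entry rather than redesigning the surface.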

I’m not sure anyone’s going to pay the kinds of dollars that it would probably take today to create a swappable Second Life keyboard – but as touch and surface technology take over from our traditional keyboard, I wonder how far-fetched it is to think that the challenge will eventually shift from organizing the screen to organizing the keyboard.

If you could have a dynamically generated touch keyboard, one where you can swap out interfaces – one for chat, one for building, maybe one for shopping or something – what would you put on it? Is changing the interface from the eye/screen to the fingertips too big a leap?

http://www.artlebedev.com/everything/optimus-tactus/

Until there is acceptable speech recognition, keyboards are here to stay.

Gestures and touch screens are useless for computer use.

You can play stupid games and make simple navigation out of gestures/touches but that’s all.

And machines are a long way from being able to understand even half a sentence if it’s arbitrary, like ‘hey, open second life and log me in while i’m out in the kitchen.’

Maybe you should instead re-program people to speak in a computer-understandable way.

As for keyboards, you would do better to make vertical keyboards and mice (search Google for existing products) to protect your hands’ health. Oh, but then you can’t see the nice LED displays…